MICO is shorthand for “Media in Context”. We are a research project partially funded by the European Commission's 7th Framework Programme. We are now approaching our final phase of development, with a final version of the components available in October 2016.

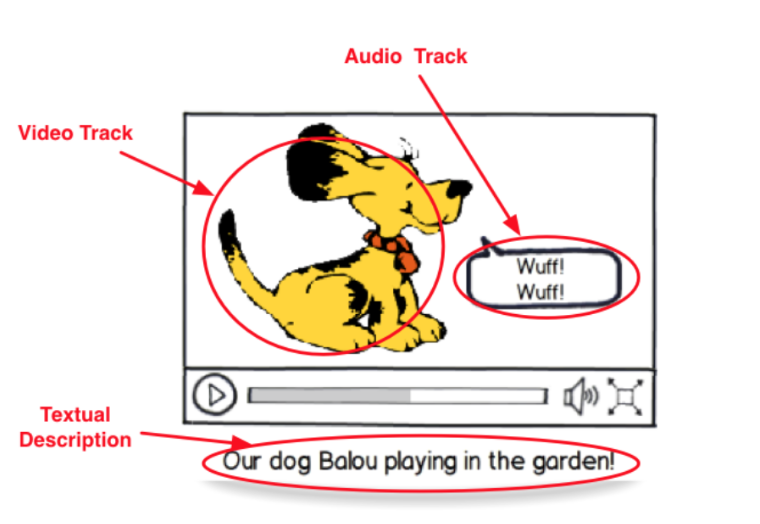

The main goal of the project is to give meaning to cross-media content in the form of automatic annotations, i.e. machine-readable code. These annotations capture as much of the context of the content as possible, regardless of whether they come from analysing the text, audio, image or video elements of the composite cross-media content. We are fortunate in MICO to have a diverse group of ambitious use cases from Zooniverse (University of Oxford) and InsideOut10 to guide our development. Testing and tuning are further supported by showcases provided by Zaizi Ltd, Codemill, AudioTrust and Redlink.

The final solution will be available as a software platform that takes cross-media content (e.g. an image and its surrounding text) as data input, extracts and aligns information within a configurable extraction process, and provides cross-media output. This output is composed of unstructured data such as media fragments as well as structured metadata. It is accessible over both standardized interfaces (Linked Data, SPARQL) and custom JSON endpoints. A key component of the MICO platform is the configurable analysis process (known as the “Broker”), which lets users define fine-grained workflows by selecting analyzers from a pool and combining them in various ways.
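To make the idea of selecting analyzers from a pool more concrete, here is a minimal Python sketch. It is not the MICO Broker API: the names `Item`, `POOL`, `run_workflow` and the two toy analyzers are hypothetical and exist only for illustration. It shows only the general pattern of running a user-chosen sequence of analyzers over one piece of cross-media content and collecting their annotations.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Item:
    """Hypothetical container: content parts plus annotations collected so far."""
    parts: Dict[str, str]  # e.g. {"text": "...", "image": "cat.png"}
    annotations: List[dict] = field(default_factory=list)


# An analyzer reads an item and returns zero or more annotations.
Analyzer = Callable[[Item], List[dict]]


def word_count(item: Item) -> List[dict]:
    """Toy text analyzer: counts whitespace-separated words."""
    text = item.parts.get("text", "")
    return [{"source": "text", "type": "word_count", "value": len(text.split())}]


def image_format(item: Item) -> List[dict]:
    """Toy image analyzer: reads the format from the file extension."""
    name = item.parts.get("image")
    if name is None:
        return []
    extension = name.rsplit(".", 1)[-1].lower()
    return [{"source": "image", "type": "format", "value": extension}]


# Hypothetical pool of available analyzers, keyed by name.
POOL: Dict[str, Analyzer] = {
    "word_count": word_count,
    "image_format": image_format,
}


def run_workflow(item: Item, steps: List[str]) -> Item:
    """Run the named analyzers in order and append their annotations to the item."""
    for name in steps:
        item.annotations.extend(POOL[name](item))
    return item


if __name__ == "__main__":
    item = Item(parts={"text": "A cat on a mat", "image": "cat.PNG"})
    run_workflow(item, ["word_count", "image_format"])
    for annotation in item.annotations:
        print(annotation)
```

In this sketch a workflow is just an ordered list of analyzer names, so changing the analysis means changing the list rather than the code. The real platform's workflow definitions are richer than this.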

The final release of the MICO platform will be available this summer, both as a public demo for testing and as an open source software bundle.